Download Links

Click on the following link to start the download:

Introduction

Promotional Video

This promotional video was uploaded in January 2012 to announce the dataset and its primary features. It gives a visual overview of what the dataset is about.

The following sections give a detailed explanation of the dataset features and footage, as well as the download links for the different parts of the New Tsukuba Stereo Dataset.

About

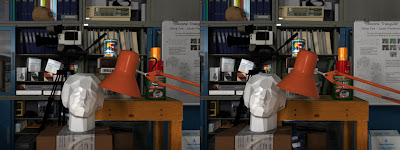

This dataset contains 1800 stereo pairs with ground truth disparity maps, occlusion maps and discontinuity maps that will help to further develop the state of the art of stereo matching algorithms and evaluate their performance. It was generated using photo-realistic computer graphics techniques and modeled after the original "head and lamp" stereo scene released by the University of Tsukuba in 1997.

The dataset is a 1-minute video sequence and also contains the 3D position and orientation of the camera for each frame, so it can also be used to develop and evaluate camera tracking methods.

This dataset is freely downloadable for research and non-commercial purposes. The download links are provided below.

Making Of

This dataset is entirely computer generated in 3D, using a wide variety of 3D and photo editing software.

All the objects in the scene were modeled using polygonal modelling.

Some of the more complex objects also required UV coordinate maps, so that a 2D texture created with photo editing software could be applied to them to add a very realistic level of detail.

The next step was to create realistic materials and apply them to the 3D objects, and to set accurate photometric lighting conditions. All of these materials and lights follow real-world physics, which makes them look realistic after the rendering process.

Once the whole scene had been modeled, we proceeded to render it. At render time, the computer's CPU calculated how the photons emitted by the CG lights would react when reaching a given surface, and how that surface would respond to the light depending on its glossiness, transparency, translucency and other parameters.

The final result is a photorealistic video in which any parameter, distance, measurement or 3D position can be known exactly, because it exists in a computer-generated world with a defined coordinate system.

Features

1800 Stereo Pairs

With our CG scene created and properly lit, we can add any number of stereo or regular cameras, which behave like real-world cameras within this computer-generated scene.

For this dataset, we created a stereo camera and animated it moving through the scene.

The result is a 1-minute video with a frame rate of 30 frames per second, which gives a total of 1800 frames for each camera of the stereo pair (3600 images in total).
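The frame counts above follow from simple arithmetic. The short sketch below only restates the figures given in the text; the constant and function names are illustrative and not part of the dataset.

```python
# Frame-count arithmetic for the sequence described above.
DURATION_SECONDS = 60    # 1-minute sequence
FRAMES_PER_SECOND = 30   # stated frame rate
CAMERAS_PER_RIG = 2      # left and right camera of the stereo rig


def count_frames(duration_s: int, fps: int) -> int:
    """Number of frames captured by one camera."""
    return duration_s * fps


def count_images(duration_s: int, fps: int, cameras: int) -> int:
    """Number of images captured by all cameras of the rig."""
    return count_frames(duration_s, fps) * cameras


stereo_pairs = count_frames(DURATION_SECONDS, FRAMES_PER_SECOND)
total_images = count_images(DURATION_SECONDS, FRAMES_PER_SECOND, CAMERAS_PER_RIG)
print(stereo_pairs, total_images)  # 1800 3600
```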

In future work we might consider adding other numbers or configurations of cameras in order to create new datasets for research purposes.

100% accurate Depth Map

Since we are working with a completely computer-generated scene, we can very easily obtain parameters such as depth maps.

Because we know the exact position of every point in the scene, we can generate a very accurate depth map: the distance between the camera's lens and any object in the scene is known.

Thanks to this, and to some features offered by the 3D software, we can generate a 100% accurate depth map, with one depth image for each of the 1800 stereo pairs in the video.

Disparity, Discontinuity and Occlusion

We can obtain these three maps from the computer-generated depth map. There are 1800 stereo pair maps in total for each class. These three maps are also available for download as part of the dataset.
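The text does not say exactly how the maps were derived from depth, so the following is only a minimal sketch under stated assumptions. It uses the standard relation for a rectified pinhole stereo pair, d = f * B / Z, and marks a pixel as a discontinuity when its disparity jumps by more than a threshold relative to a 4-neighbour. The focal length, baseline, threshold and toy depth values are hypothetical and are not the dataset's actual parameters or definitions.

```python
from typing import List

Map = List[List[float]]


def depth_to_disparity(depth: Map, focal_px: float, baseline: float) -> Map:
    """Convert depth (same length unit as baseline) to disparity in pixels.

    Uses d = focal_px * baseline / Z, valid for a rectified pinhole pair.
    Non-positive depths are mapped to 0.0 (treated as "no measurement").
    """
    return [
        [focal_px * baseline / z if z > 0 else 0.0 for z in row]
        for row in depth
    ]


def discontinuity_mask(disparity: Map, jump_px: float = 1.0) -> List[List[bool]]:
    """Mark pixels whose disparity differs from a 4-neighbour by more than jump_px."""
    rows, cols = len(disparity), len(disparity[0])
    mask = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    if abs(disparity[r][c] - disparity[nr][nc]) > jump_px:
                        mask[r][c] = True
                        break
    return mask


# Toy 2x3 depth map: a near object (depth 50) in front of a far wall (depth 100).
# focal_px and baseline are hypothetical values chosen for the example.
depth = [[100.0, 100.0, 50.0],
         [100.0, 100.0, 50.0]]
disp = depth_to_disparity(depth, focal_px=600.0, baseline=10.0)
edges = discontinuity_mask(disp)
```

In this toy case the near object gets twice the disparity of the wall, and the mask flags the pixels on both sides of the depth edge.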

Camera Coordinates for Visual Camera Tracking Algorithm Validation

In addition to the video footage, we have also generated a list with the 3D position of the stereo camera in every frame of the video. We believe this information will be very useful for validating visual camera tracking and SLAM algorithms.

This file can be found in the download section.
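One common way to use such a ground-truth camera track is to compare it frame by frame with the positions estimated by a tracking algorithm. The sketch below computes the root-mean-square position error. It assumes both trajectories are already expressed in the same coordinate system and units (no alignment step), and it does not parse the dataset's file, whose format is not described here. The function name and sample positions are hypothetical.

```python
import math
from typing import Sequence, Tuple

Position = Tuple[float, float, float]


def position_rmse(estimated: Sequence[Position],
                  ground_truth: Sequence[Position]) -> float:
    """Root-mean-square Euclidean distance between per-frame positions."""
    if len(estimated) != len(ground_truth):
        raise ValueError("trajectories must have one position per frame")
    if not estimated:
        raise ValueError("trajectories must not be empty")
    squared = [
        sum((e - g) ** 2 for e, g in zip(est, gt))
        for est, gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Three made-up frames: the estimate is off by 5 units in the second frame only.
ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
estimated = [(0.0, 0.0, 0.0), (1.0, 3.0, 4.0), (2.0, 0.0, 0.0)]
error = position_rmse(estimated, ground_truth)
```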

Four Different Illumination Conditions

We created 4 different stereo pair sets, one for each illumination condition: Daylight, Fluorescent, Lamps and Flashlight.

Each of them has the same features described in the 1800 Stereo Pairs section above.

Each illumination condition, with the exception of Fluorescent, poses specific difficulties for tracking algorithms. Using our dataset as training data for research purposes should therefore help develop better algorithms.

All four illuminations can be downloaded separately. The depth, discontinuity, disparity and occlusion maps are the same for every illumination, because the geometry and camera position remain the same.

Illumination challenges:

- Fluorescent: This is the default illumination. It poses no great challenge, since the lighting is very even across the surfaces and creates no exaggerated contrasts between light and shadow.

- Daylight: This lighting condition is very similar to Fluorescent in that it gives smooth illumination to the objects in the scene, except for the areas near the window, where the intensity of the sunlight produces an overexposed image, a challenging real-world difficulty for the algorithms to solve. This data, combined with the known 100% accurate depth map (which is the same for all 4 illuminations), can lead to the development of better algorithms that are robust to overexposed input images.

- Flashlight: This set adds another difficulty: poor illumination. The scene has been rendered in semi-darkness, lit only by a flashlight attached to the stereo camera. This set will be especially useful for researchers developing tracking algorithms for robots or other machines that have to navigate through dark spaces lit only by an attached flashlight or similar.

- Lamps: This scene is in almost complete darkness, lit only by the subtle moonlight that comes in through the windows and by the desk lamps. It presents a very big challenge for refining algorithms that work with underexposed images.
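Because the ground-truth maps are shared by all illuminations, the output of one stereo matcher under each lighting condition can be scored against the same reference. The sketch below uses the bad-pixel rate, a widely used stereo metric: the fraction of non-occluded pixels whose disparity error exceeds a threshold. The mask convention (True means occluded), the 1-pixel threshold and the sample values are assumptions made for illustration, not the dataset's file encoding or an official evaluation protocol.

```python
from typing import Dict, List

Map = List[List[float]]
Mask = List[List[bool]]


def bad_pixel_rate(estimate: Map, truth: Map, occluded: Mask,
                   threshold_px: float = 1.0) -> float:
    """Fraction of non-occluded pixels whose disparity error exceeds threshold_px."""
    bad = 0
    counted = 0
    for est_row, gt_row, occ_row in zip(estimate, truth, occluded):
        for est, gt, occ in zip(est_row, gt_row, occ_row):
            if occ:
                continue  # skip pixels marked as occluded
            counted += 1
            if abs(est - gt) > threshold_px:
                bad += 1
    return bad / counted if counted else 0.0


# One shared ground truth (toy 2x2 maps), reused for every illumination.
truth = [[10.0, 10.0], [20.0, 20.0]]
occluded = [[False, False], [False, True]]

# Hypothetical outputs of one stereo matcher run on two of the illuminations.
estimates: Dict[str, Map] = {
    "fluorescent": [[10.2, 9.9], [20.5, 0.0]],
    "flashlight": [[13.0, 10.0], [25.0, 0.0]],
}
scores = {name: bad_pixel_rate(est, truth, occluded)
          for name, est in estimates.items()}
```

With these made-up numbers the matcher has no bad pixels under Fluorescent and two bad pixels out of three under Flashlight, which is the kind of gap the harder illuminations are meant to expose.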